Are Great CEOs Born or Made?

A panel of experts discuss the business world’s preeminent position.

Networking has become a daily obsession. According to the Pew Research Center, 74 percent of adults online use social-network sites such as Facebook and LinkedIn. And a 2013 report from Ipsos Open Thinking Exchange estimates that people spend an average of three hours daily on online social networks. That doesn’t count all the networking that takes place offline, at industry cocktail events, alumni reunions, and religious meetings, among other places.

Most of the time, developing and mining a network is a smart move. A person develops social networks hoping and expecting to gain something—whether that be a job, contact, investor, or simply a friend. He may turn to those networks when in need or feeling vulnerable, perhaps when trying to make a risky business decision.

But avid networker, beware: these social networks have a dark side. According to research by Christopher B. Yenkey, assistant professor of organizations and strategy at Chicago Booth, our networks can make us more vulnerable to fraud and even encourage us to cheat. “We shouldn’t be blind to the ways they can make us vulnerable to opportunism or even push us into doing things we shouldn’t do,” says Yenkey. His research findings could help you avoid becoming a victim.

Yenkey teaches a class that focuses on creating value by understanding social networks, of which there are many kinds, he explains to his students. To them, “social networks” refers to the ubiquitous online version, but some networks are composed of direct relationships, and others include people who are linked by a common bond, such as an alma mater. They are, he tells his students, their “tribes.” And to study such networks, he went to an area of the world where tribal membership is explicit: in Kenya, most residents are members of one of 12 primary ethnic tribes that are important in economics and politics.

Interested in how networks play into frauds, he studied a major financial fraud at the Nairobi Securities Exchange. Starting in late 2006, agents at the country’s largest brokerage, Nyaga Stockbrokerage Ltd., began using clients’ shares to manipulate the market. With electronic access to client accounts, they were able to steal cash and shares, until the massive fraud was discovered in early 2008. At a time when the firm served approximately a fifth of the emerging market’s total investors, its brokers looted approximately one-quarter of the firm’s 100,000 clients’ accounts.

Yenkey was interested in whether tribal affiliations influenced which clients were preyed upon. In a country where 98 percent of all citizens vote with their tribe in elections, and where professional and social relationships are similarly influenced by tribal membership, was there any reason to think a corrupt manager would steal from his own group? Most research and our intuition about markets would predict that a corrupt agent would be more likely to cheat clients outside of his network, his tribe—especially those of a rival tribe. But Yenkey had another hypothesis: if we are more likely to trust people in our network, including those whom we are only tied to indirectly, that assumed trust could become a resource for committing fraud.

If we believe that members of our network are trustworthy, we give them more leeway for taking advantage of that trust.

To test this proposition, Yenkey accessed NSE databases (with regulators’ supervision) comprising detailed information about all of the market’s investors, including names, addresses, gender, portfolio composition, and more. With help from Kenyan research assistants from six different tribes, he used last names to identify which tribes the investors and agents belonged to. The research assistants reviewed and categorized 20,000 unique last names, capturing 94 percent of all investors.

The Nyaga firm was owned and operated by members of Kenya’s largest ethnic group, and approximately 60 percent of its clients belonged to that same tribe. But Nyaga’s client list also included investors belonging to almost all of Kenya’s tribes.

Yenkey compared lists of Nyaga’s clients with a list of investors who had registered claims with Kenya’s Investor Compensation Fund, which tracks victimization in the market, to see who had been cheated in the Nyaga fraud. His findings suggest that belonging to the broker’s tribe actually made people more vulnerable to the fraud. Even worse, investors who lived in areas where their tribal identity was critical to their safety—where being a member of another tribe could have made them targets of violence—were even more likely to be cheated.
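As a rough illustration of the comparison described above, the sketch below matches a client list against a set of compensation-fund claimants and computes the share of victims in each group. It is a simplified rate comparison, not the statistical model used in the research; the function names, the record layout, and the toy records are all hypothetical.

```python
def victim_rates(clients, claimant_ids):
    """Return the share of clients in each group who filed a claim.

    clients: iterable of (client_id, same_tribe_as_broker) pairs (hypothetical layout).
    claimant_ids: set of client ids that appear on the claims list.
    """
    totals, victims = {}, {}
    for client_id, same_tribe in clients:
        group = "same_tribe" if same_tribe else "other_tribe"
        totals[group] = totals.get(group, 0) + 1
        if client_id in claimant_ids:
            victims[group] = victims.get(group, 0) + 1
    return {group: victims.get(group, 0) / totals[group] for group in totals}


def relative_risk(rates):
    """Ratio of the same-tribe victim rate to the other-tribe victim rate."""
    return rates["same_tribe"] / rates["other_tribe"]


# Toy records, invented purely for illustration (not data from the study):
# ten same-tribe clients and eight clients from other tribes.
toy_clients = [(i, True) for i in range(10)] + [(i, False) for i in range(10, 18)]
toy_claimants = {0, 1, 2, 10, 11}

toy_rates = victim_rates(toy_clients, toy_claimants)
print(toy_rates, relative_risk(toy_rates))
```

A ratio above 1 means same-tribe clients were cheated at a higher rate than others. A real analysis would also have to account for differences such as wealth and location.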

Investors, Yenkey saw, initially used their social networks to select brokers, just as earlier research would indicate. That was particularly true in areas where there was more risk to the tribe. In December 2007, Kenya’s disputed presidential election led to intertribal violence that left 1,300 people dead and another 400,000 forced from their homes. In districts that experienced this violence, investors from the tribe that operated the Nyaga firm were about 40 percent more likely to choose a same-tribe broker. The investors were also more likely to choose a same-tribe stockbroker in areas where they were outnumbered by members of other tribes. In most locations the data captured, members of rival tribes avoided hiring stockbrokers from the tribe that ran Nyaga. Just like many of us do, those investors were presumably tapping into their network, their tribe, to find a trustworthy broker.

But quite often those investors got the opposite of what they were seeking. When Yenkey looked at the Nyaga firm’s 100,000 clients, he observed that a corrupt broker was almost 20 percent more likely to steal from a member of his own tribe. And the likelihood of a same-tribe client becoming a victim was almost double that when that client lived in an area affected by the political violence. A corrupt broker was more likely to steal from clients who belonged to his own tribe, “especially when they reside in the diverse and violent areas that led them to choose a same-tribe broker initially,” Yenkey writes. Moreover, wealthy clients were particularly at risk. While earlier research has suggested that criminals tend to avoid wealthy targets, in order to avoid stricter penalties if caught, Yenkey finds that wealthy same-tribe clients of a corrupt Kenyan broker were twice as likely to be cheated as lower-income clients in the tribe.

While most brokers were honest, the corrupt brokers took advantage of the ties that had brought clients to them.

“Essentially, we are vulnerable to being taken advantage of by people in our network because we assume they will take care of us,” Yenkey says. “We ask fewer questions ahead of time, and we are less likely to keep tabs on them later. Most of the time, this works very well for us. But when there is a bad apple in the network, it makes us more vulnerable.”

Yenkey’s research suggests that not only can a social network increase a person’s vulnerability to fraud, it also can increase the chance that he or she may perpetrate one.

To arrive at this conclusion, Yenkey and the University of California-Davis’s Donald Palmer studied the world of professional cycling, which represents another kind of social network. Athletes, owners, managers, assistants, and staff form relationships as they work together, and some may depart at the end of the cycling season to join other teams.

This network has had its own battle with fraud, in the form of performance-enhancing drugs. Cyclists have long used these drugs to better compete in races including the Tour de France, a grueling 2,200-mile race that takes riders through the Pyrenees and the Alps and finishes on the Champs-Élysées in Paris. In the 1920s, riders boosted their energy with cocaine. Race organizers banned drug use in 1965, but in the late 1990s through the mid-2000s, there was rampant use of steroids; human growth hormone; a blood-boosting hormone called erythropoietin, or EPO; and amphetamines to improve performance at high altitudes, accelerate recovery, and provide energy.

In the mid-2000s, the International Cycling Union (officially called the Union Cycliste Internationale, or UCI for short) and the police in Europe began cracking down more seriously on cyclists’ drug use, instituting more and better testing and launching investigations into doping operations. The UCI began requiring cyclists to provide blood and urine samples several times throughout the year. A panel of anti-doping experts tracked each rider’s samples over time and assigned him a “suspicion score,” essentially a medical opinion of the likelihood that a cyclist was using performance-enhancing drugs. These scores were typically kept confidential and used only by racing officials, but in 2011 the scores for all 198 participants in the 2010 Tour de France were leaked to the French sports magazine L’Équipe.

Yenkey and Palmer used the leaked report to study links between cycling networks and fraudulent behavior. The data led them to two main observations.

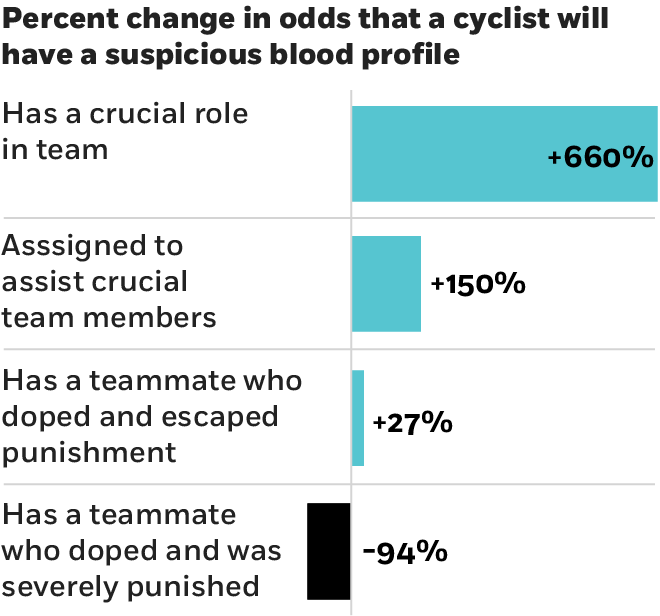

The first is that the role a person has in a network can influence his or her tendency to cheat. People expected to perform complex and difficult tasks at specific points in time are under significant pressure to ensure they can live up to the challenge. This pressure leads them to consider all means available to them, whether legal or illegal. A rider assigned a critical role on a cycling team, expected to perform a difficult task at a specific time (sprinting, for example), was almost six times more likely to have a suspicious blood profile than a generalist rider. The cyclists assigned to assist that crucial team member were more than twice as likely as a generalist to have suspicious blood profiles. Those generalist riders, also known as free agents, were no more likely than the average cyclist to be suspected of drug use.

The second observation: people in a network teach others what behavior they can and can’t get away with. Using data from cycling fan websites, the researchers mapped the social networks of 195 of the 2010 Tour de France competitors by tracing the teams they had ridden for throughout their careers. Doing this, the researchers identified the present and past teammates of each rider, as well as what they call “twice-removed teammates,” or the teammates of former teammates (think of these as friends of friends).
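The network mapping described above can be sketched in a few lines. This is a minimal illustration, assuming each rider's career is available as a set of (team, season) stints; the function names and the data layout are hypothetical, and the researchers' actual procedure may differ. Here a "twice-removed teammate" is taken to be anyone who rode with one of a rider's teammates but never with the rider directly.

```python
from collections import defaultdict


def build_teammates(careers):
    """Map each rider to everyone who shared a (team, season) stint with them.

    careers: dict of rider -> set of (team, season) tuples (hypothetical layout).
    """
    roster = defaultdict(set)
    for rider, stints in careers.items():
        for stint in stints:
            roster[stint].add(rider)

    teammates = {rider: set() for rider in careers}
    for riders in roster.values():
        for rider in riders:
            teammates[rider] |= riders - {rider}
    return teammates


def twice_removed(teammates, rider):
    """Teammates of a rider's teammates, excluding direct teammates and the rider."""
    direct = teammates.get(rider, set())
    second = set()
    for mate in direct:
        second |= teammates.get(mate, set())
    return second - direct - {rider}


# Invented example careers, for illustration only.
example_careers = {
    "A": {("Team X", 2009), ("Team Y", 2010)},
    "B": {("Team Y", 2010)},
    "C": {("Team X", 2009), ("Team Z", 2010)},
    "D": {("Team Z", 2010)},
}
example_network = build_teammates(example_careers)
print(twice_removed(example_network, "A"))
```

In the example, rider A rode with B and C directly, and C later rode with D, so D is A's only twice-removed teammate.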

The researchers also used data from fan websites that have cataloged more than 1,500 doping incidents that occurred between 1999 and 2010. The incidents included positive drug tests, and evidence of doping from hotel-room raids, phone taps, and vehicle searches. From this data, the researchers reconstructed the cyclists’ full careers, including documented doping incidents and any resulting sanctions.

They find that seeing a fellow rider punished deterred a teammate from committing the same offense. Only 45 percent of prior doping events experienced by people in the riders’ networks led to sanctions, but just over half of the time, those sanctions were major: guilty riders were suspended from competition for more than six months. And the researchers saw that when riders had doped and been sanctioned, their teammates tended to have lower suspicion scores leading up to the 2010 Tour de France. The opposite was true for riders whose teammates had doped but had not been sanctioned.

The effect was particularly strong for cyclists whose current teammate had been caught doping. If the prior infraction had been strongly punished, contemporary teammates were 94 percent less likely to have more-suspicious blood values, while twice-removed teammates were just 8 percent less likely. If a cyclist had been caught doping and hadn’t been heavily sanctioned, a contemporary teammate was 27 percent more likely to have a high suspicion score, compared with 3 percent for twice-removed teammates.

The takeaway: people closer to you in your network have more influence on you. You also learn things from people who are more distant in a network, but you do so at a lesser rate.

“If your social network is telling you, ‘Watch out, there are strong consequences for doing this,’ you’re less likely to do it,” Yenkey says. “But if your network contains people who have cheated and basically gotten away with it, it teaches you this is something you can do, and so you do it.”

Frauds are clearly moving through social networks far beyond Kenya and professional cycling. Networks are key to a number of Ponzi schemes, including the Bernard Madoff scandal, where Madoff used social ties to recruit new investors and victims. Before Madoff, Charles Ponzi swindled his Catholic neighbors in Boston, even his own parish priest. The blog ponzitracker.com is updated regularly with news of the latest alleged and confirmed schemes.

The same dynamics that led brokers and cyclists to cheat are at work in networks of all kinds, around the world. According to the 2011 PricewaterhouseCoopers Global Economic Crime Survey, insiders committed 56 percent of all serious corporate frauds reported worldwide.

The research findings, therefore, have broad application. To avoid becoming a victim of fraud, remember that while your network can be a great source of information, contacts, and leads, deviants in the network may be looking to take advantage of your trust. Use your network to your advantage, but do so with caution.

If you or someone else plays a critical role in an organization—for example as the “rainmaker” responsible for bringing in new business—monitor decisions and actions when the pressure builds. The data suggest that the pressure of meeting expectations can drive people in critical roles to make bad decisions.

And if you find fraud in your network or organization, be vigilant about punishing the wrongdoers. Otherwise, you may encourage more of the same behavior.
